
SEO is a complex, vast, and sometimes mysterious practice. There are many aspects of SEO that can lead to confusion.

Not everyone will agree with what SEO entails – where technical SEO stops and development begins.

What also doesn’t help is the vast amount of misinformation that goes around. There are many “experts” online, and not all of them should bear that self-proclaimed title. How do you know whom to trust?

Even Google employees can sometimes add to the confusion. They struggle to define their own updates and systems and sometimes offer advice that conflicts with previously given statements.

The Dangers Of SEO Myths

The issue is that we simply don’t know exactly how the search engines work. Because of this, much of what we do as SEO professionals is trial and error and educated guesswork.

When you are learning about SEO, it can be difficult to test all of the claims you hear.

That’s when the SEO myths begin to take hold. Before you know it, you’re proudly telling your line manager that you’re planning to “AI Overview optimize” your website copy.

SEO myths can be busted a lot of the time with a pause and some consideration.

How, exactly, would Google be able to measure that? Would that actually benefit the end user in any way?

There’s a danger in SEO of considering the search engines to be omnipotent, and because of this, wild myths about how they understand and measure our websites start to grow.

What Is An SEO Myth?

Before we debunk some common SEO myths, we should first understand what forms they take.

Untested Wisdom

Myths in SEO tend to take the form of handed-down wisdom that isn’t tested.

As a result, something that might well have no impact on driving qualified organic traffic to a site gets treated like it matters.

Minor Factors Blown Out Of Proportion

SEO myths might also be something that has a small impact on organic rankings or conversion but is given too much importance.

This might be a “tick box” exercise that is hailed as a critical factor in SEO success, or simply an activity that might only help your site eke ahead if everything else between you and your competition was truly equal.

Outdated Advice

Myths can arise simply because something that once helped sites rank and convert well no longer does, but is still being advised. It might be that something used to work really well.

Over time, the algorithms have grown smarter. The public is more averse to being marketed to.

Simply put, what was once good advice is now defunct.

Google Being Misunderstood

Many times, the source of a myth is Google itself.

Unfortunately, a slightly obscure or just not straightforward piece of advice from a Google representative gets misunderstood and run away with.

Before we know it, a new optimization service is being sold off the back of a flippant comment a Googler made in jest.

SEO myths can be based on fact, or perhaps these are, more accurately, SEO legends?

In the case of Google-born myths, it tends to be that the fact has been so distorted by the SEO industry’s interpretation of the statement that it no longer resembles useful information.

26 Common SEO Myths

So, now that we know what causes and perpetuates SEO myths, let’s find out the truth behind some of the more common ones.

1. The Google Sandbox And Honeymoon Effects

Some SEO professionals believe that Google will automatically suppress new websites in the organic search results for a period of time before they are able to rank more freely.

Others suggest there is a sort of Honeymoon Period, during which Google will rank new content highly to test what users think of it.

The content would be promoted to ensure more users see it. Signals like click-through rate and bounces back to the search engine results pages (SERPs) would then be used to measure whether the content is well received and deserves to remain ranked highly.

There is, however, the Google Privacy Sandbox. This is designed to help maintain people’s privacy online. It is a different sandbox from the one that allegedly suppresses new websites.

When asked specifically about the Honeymoon Effect and the rankings Sandbox, John Mueller answered:

“In the SEO world, this is sometimes called kind of like a sandbox where Google is like keeping things back to prevent new pages from showing up, which is not the case.

Or some people call it like the honeymoon period where new content comes out and Google really loves it and tries to promote it.

And it’s again not the case that we’re explicitly trying to promote new content or demote new content.

It’s just, we don’t know and we have to make assumptions.

And then sometimes those assumptions are right and nothing really changes over time.

Sometimes things settle down a little bit lower, sometimes a little bit higher.”

So, there is no systematic promotion or demotion of new content by Google, but what you might be noticing is that Google’s assumptions are based on the rest of the website’s rankings.

- Verdict: Officially? It’s a myth.

2. Duplicate Content Penalty

This is a myth that I hear a lot. The idea is that if you have content on your website that is duplicated elsewhere on the web, Google will penalize you for it.

The key to understanding what is really going on here is knowing the difference between algorithmic suppression and manual action.

A manual action, the situation that can result in webpages being removed from Google’s index, is taken by a human at Google.

The website owner will be notified through Google Search Console.

An algorithmic suppression occurs when your page cannot rank well because it has been caught by an algorithmic filter.

Essentially, having copy that is taken from another webpage might mean you can’t outrank that other page.

The search engines may determine that the original host of the copy is more relevant to the search query than yours.

As there is no benefit to having both in the search results, yours gets suppressed. This is not a penalty. This is the algorithm doing its job.

There are some content-related manual actions, but in general, copying one or two pages of someone else’s content is not going to trigger them.

It is, however, potentially going to land you in other trouble if you have no legal right to use that content. It can also detract from the value your website brings to the user.

What about content that is duplicated across your own site? Mueller clarifies that duplicate content is not a negative ranking factor. If there are multiple pages with the same content, Google may choose one to be the canonical page, and the others will not be ranked.
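If you want to see where this applies on your own site, one rough audit is to group pages whose body text is identical. The sketch below assumes you already have each URL’s extracted text; it normalizes whitespace and case, hashes the result, and lists URLs that share a hash. The URLs and text are made up, and the “shortest URL wins” rule in the hypothetical `suggest_canonical` helper is only a convention for deciding which page you might point `rel="canonical"` at. It is not how Google chooses a canonical, which draws on many signals.

```python
import hashlib
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't count."""
    return re.sub(r"\s+", " ", text).strip().lower()


def group_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose normalized body text is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        groups[digest].append(url)
    # Only groups containing more than one URL are duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


def suggest_canonical(urls: list[str]) -> str:
    """Hypothetical rule of thumb: prefer the shortest URL (e.g. one without tracking parameters)."""
    return min(urls, key=lambda u: (len(u), u))


# Hypothetical pages from one site.
pages = {
    "https://example.com/shoes": "Red running shoes.  Free delivery.",
    "https://example.com/shoes?utm_source=mail": "red running shoes. free delivery.",
    "https://example.com/boots": "Leather boots.",
}

for group in group_duplicates(pages):
    print(suggest_canonical(group), "<-", group)
```

Exact hashing only catches verbatim duplicates; near-duplicates, such as pages that differ by a sentence or two, need a fuzzier comparison.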

3. PPC Advertising Helps Rankings

This is a common myth. It’s also quite quick to debunk.

The idea is that Google will favor websites that spend money with it through pay-per-click advertising. This is simply false.

Google’s algorithm for ranking organic search results is completely separate from the one used to determine PPC ad placements.

Running a paid search advertising campaign through Google while carrying out SEO might benefit your site for other reasons, but it won’t directly benefit your ranking.

4. Area Age Is A Rating Issue

This declare is seated firmly within the “complicated causation and correlation” camp.

As a result of a web site has been round for a very long time and is rating properly, age have to be a rating issue.

Google has debunked this fantasy itself many instances.

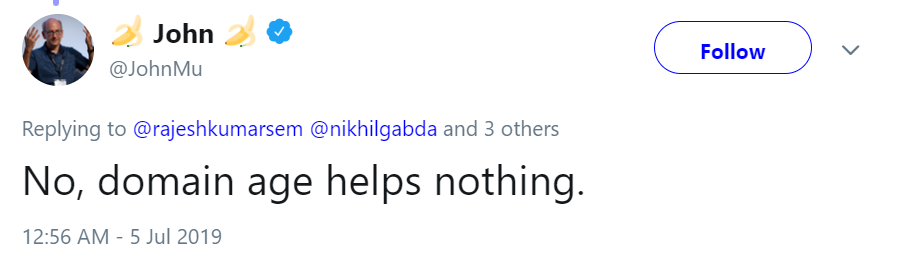

In July 2019, Mueller replied to a submit on Twitter.com (recovered by means of Wayback Machine) that recommended that area age was considered one of “200 indicators of rating” saying, “No, area age helps nothing.”

Image from Twitter.com recovered through Wayback Machine, June 2024

The truth behind this myth is that an older website has had more time to do things well.

For example, a website that has been live and active for 10 years may well have acquired a high volume of relevant backlinks to its key pages.

A website that has been running for less than six months will be unlikely to compete with that.

The older website appears to be ranking better, and the conclusion is that age must be the determining factor.

5. Tabbed Content Affects Rankings

This idea is one that has roots going back a long way.

The premise is that Google will not assign as much value to content sitting behind a tab or accordion, for example, text that is not viewable on the first load of a page.

Google again debunked this myth in March 2020, but it has been a contentious idea among many SEO professionals for years.

In September 2018, Gary Illyes, Webmaster Trends Analyst at Google, answered a tweet thread about using tabs to display content.

His response:

“AFAIK, nothing’s changed here, Bill: we index the content, and its weight is fully considered for ranking, but it might not get bolded in the snippets. It’s another, more technical question of how that content is surfaced by the site. Indexing does have limitations.”

If the content is present in the HTML, there is no reason to assume that it is being devalued just because it is not visible to the user on the first load of the page. This is not an example of cloaking, and Google can easily fetch the content.

As long as nothing else is stopping Google from seeing the text, it should be weighted the same as copy that isn’t in tabs.
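One way to check the first condition on your own pages is to look at the raw HTML rather than the rendered page. The minimal sketch below uses Python’s built-in `html.parser` to collect every text node, skipping `<script>` and `<style>`. It deliberately ignores the `hidden` attribute and CSS, so text in a collapsed tab panel still shows up. The markup is a made-up example, and this is not a model of how Googlebot renders pages; content that JavaScript only injects after a click would not appear in this output.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collects text nodes from raw HTML, skipping <script>/<style> and ignoring visibility."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


# Hypothetical product page: the second tab panel is hidden until the user clicks it.
html = """
<div class="tabs">
  <section id="overview">Lightweight trail shoe.</section>
  <section id="specs" hidden>Weight: 240 g. Drop: 6 mm.</section>
</div>
<script>/* tab-switching code */</script>
"""

collector = TextCollector()
collector.feed(html)
print(collector.chunks)  # the hidden tab's text is present in the source
```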

Need extra clarification on this? Then try this SEJ article that discusses this topic intimately.

6. Google Makes use of Google Analytics Information In Rankings

This can be a frequent worry amongst enterprise homeowners.

They research their Google Analytics studies. They really feel their common sitewide bounce charge is just too excessive, or their time on web page is just too low.

So, they fear that Google will understand their website to be low high quality due to that. They worry they received’t rank properly due to it.

The parable is that Google makes use of the information in your Google Analytics account as a part of its rating algorithm.

It’s a fantasy that has been round for a very long time.

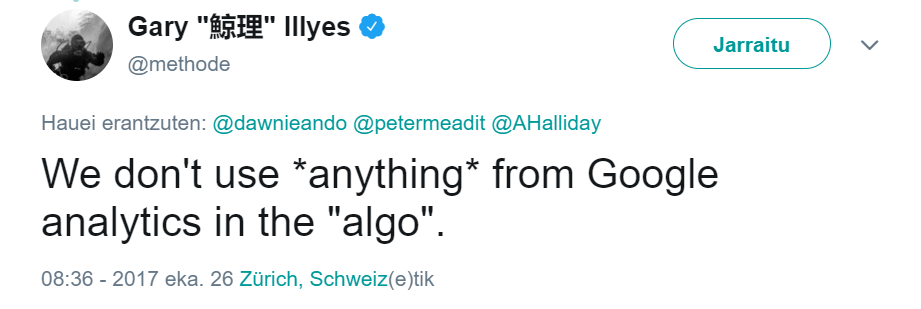

Illyes has once more debunked this concept merely with, “We don’t use *something* from Google analytics [sic] within the “algo.”

Picture from Twitter.com recovered by means of Wayback Machine, June 2024

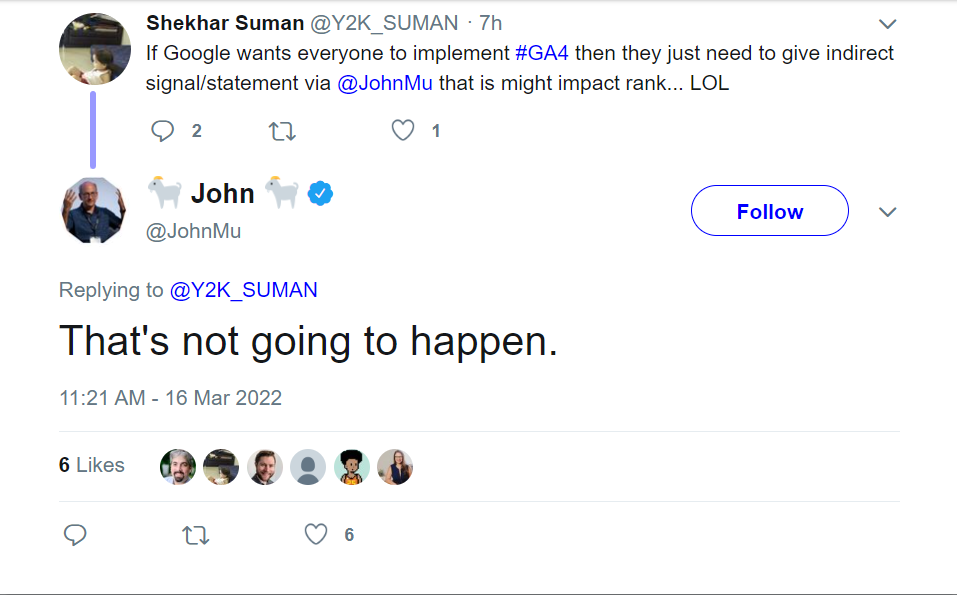

Picture from Twitter.com recovered by means of Wayback Machine, June 2024Extra just lately, John Mueller dispelled this concept but once more, saying, “That’s not going to occur” when he acquired the suggestion telling search engine optimization execs that GA4 is a rating issue would enhance its uptake.

Picture from Twitter.com recovered from Wayback Machine, June 2024

Picture from Twitter.com recovered from Wayback Machine, June 2024If we take into consideration this logically, utilizing Google Analytics knowledge as a rating issue could be actually arduous to police.

For example, using filters could manipulate data to make it seem like the site was performing in a way that it isn’t really.

What is good performance anyway?

High “time on page” might be good for some long-form content.

Low “time on page” could be understandable for shorter content.

Is either one right or wrong?

Google would also need to understand the intricate ways in which each Google Analytics account had been configured.

Some might be excluding all known bots, and others might not. Some might use custom dimensions and channel groupings, and others haven’t configured anything.
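To make the configuration point concrete, here is a toy calculation under stated assumptions: the sessions are invented, and a bounce is simplified to “a session with a single pageview” (GA4 actually defines bounce rate as the share of sessions that were not engaged). The same raw traffic produces very different numbers depending on a single filter setting.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pageviews: int
    is_known_bot: bool


def bounce_rate(sessions: list[Session], exclude_bots: bool) -> float:
    """Share of single-pageview sessions, optionally dropping known bots first."""
    kept = [s for s in sessions if not (exclude_bots and s.is_known_bot)]
    if not kept:
        return 0.0
    bounces = sum(1 for s in kept if s.pageviews == 1)
    return bounces / len(kept)


# Hypothetical traffic: 6 human sessions (2 of them bounce) plus 4 bot hits that all bounce.
sessions = (
    [Session(1, False)] * 2
    + [Session(3, False)] * 4
    + [Session(1, True)] * 4
)

print(f"{bounce_rate(sessions, exclude_bots=True):.0%}")   # 33%
print(f"{bounce_rate(sessions, exclude_bots=False):.0%}")  # 60%
```

Neither number is “wrong”; they answer different questions, which is exactly why a search engine could not compare them across accounts.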

Using this data reliably would be extremely complicated. Consider the hundreds of thousands of websites that use other analytics programs.

How would Google treat them?

This myth is another case of “correlation, not causation.”

A high sitewide bounce rate might be indicative of a quality problem, or it might not be. Low time on page could mean your site isn’t engaging, or it could mean your content is quickly digestible.

These metrics give you clues as to why you might not be ranking well; they aren’t the cause of it.

7. Google Cares About Domain Authority

PageRank is a link analysis algorithm used by Google to measure the importance of a webpage.
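For readers who haven’t seen it, a normalized version of the PageRank formula described by Brin and Page scores a page by the scores of the pages linking to it, each divided by that linker’s number of outbound links, plus a small constant controlled by a damping factor (commonly 0.85). The sketch below computes it by power iteration on a made-up three-page graph. It illustrates the published idea only; Google’s current link systems are not public.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 100) -> dict[str, float]:
    """Normalized PageRank computed by power iteration.

    `links` maps each page to the pages it links out to. Pages with no
    outbound links ("dangling" pages) share their score equally with all pages.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        dangling = sum(rank[p] for p in pages if not links.get(p))
        # Every page gets the "random jump" share plus its cut of dangling score.
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        # Each page passes its score, split evenly, along its outbound links.
        for source, targets in links.items():
            for target in targets:
                new_rank[target] += damping * rank[source] / len(targets)
        rank = new_rank
    return rank


# Hypothetical three-page site.
links = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
scores = pagerank(links)
print({page: round(score, 3) for page, score in scores.items()})
```

In this graph, C, linked from both A and B, ends up with the highest score, and B, linked only from A (which splits its vote two ways), ends up lowest.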

Google used to show a web page’s PageRank rating a quantity as much as 10 on its toolbar. It stopped updating the PageRank displayed in toolbars in 2013.

In 2016, Google confirmed that the PageRank toolbar metric was not going for use going ahead.

Within the absence of PageRank, many different third-party authority scores have been developed.

Generally identified ones are:

- Moz’s Area Authority and Web page Authority scores.

- Majestic’s Belief Move and Quotation Move.

- Ahrefs’ Area Ranking and URL Ranking.

Some search engine optimization execs use these scores to find out the “worth” of a web page.

That calculation can by no means be a wholly correct reflection of how a search engine values a web page, nonetheless.

search engine optimization execs will typically seek advice from the rating energy of a web site typically along with its backlink profile and this, too, is called the area’s authority.

You may see the place the confusion lies.

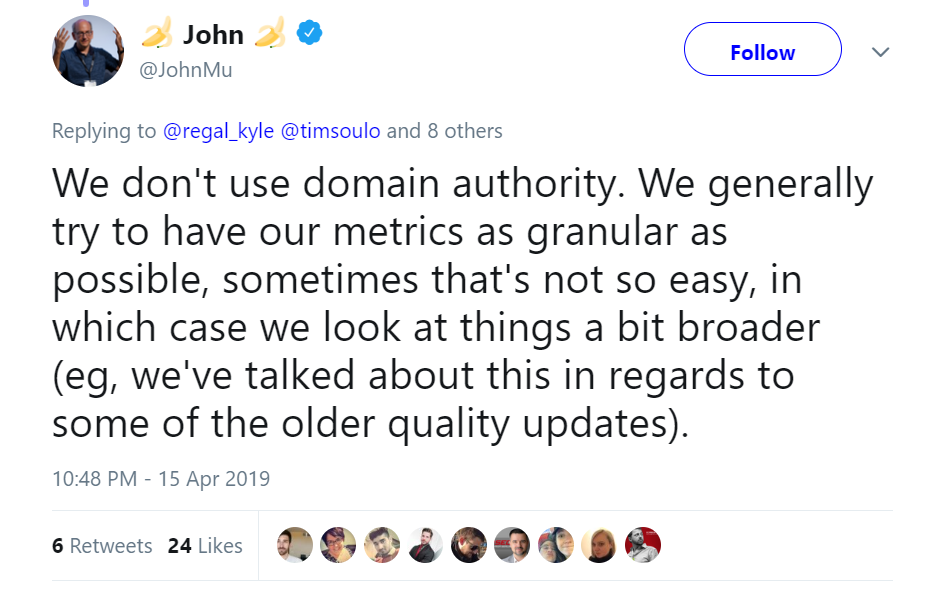

Google representatives have dispelled the notion of a site authority metric utilized by them.

“We don’t use area authority. We typically attempt to have our metrics as granular as potential, typically that’s not really easy, through which case we take a look at issues a bit broader (e.g., we’ve talked about this with regard to a number of the older high quality updates).”

Image from Twitter.com recovered through Wayback Machine, June 2024

8. Longer Content Is Better

You will have undoubtedly heard it said before that longer content ranks better.

The claim is that more words on a page automatically make yours more rank-worthy than your competitor’s. This is “wisdom” that is often shared around SEO forums with little evidence to substantiate it.

There are many studies released over the years that state facts about top-ranking webpages, such as “on average, pages in the top 10 positions in the SERPs have over 1,450 words on them.”

It would be quite easy for someone to take this information in isolation and assume it means that pages need approximately 1,500 words to rank on Page 1. That isn’t what the study is saying, however.

Unfortunately, this is an example of correlation, not necessarily causation.

Just because the top-ranking pages in a particular study happened to have more words on them than the pages ranking 11th and lower does not make word count a ranking factor.
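A toy simulation shows how such a correlation can appear without any causal link. Under the made-up assumptions in the code, a hidden “authority” score drives both how long a site’s pages are and where it ranks, while the ranking itself never looks at word count. Word count and position still come out strongly correlated (a Pearson coefficient of about -0.99, negative because position 1 is best). The numbers are invented for illustration, not taken from any study.

```python
from statistics import mean


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


# Hypothetical sites: a hidden "authority" score (think links, brand, budget).
authority = [9, 8, 7, 6, 5, 4, 3, 2, 1, 0]

# Assumption: better-resourced sites also publish longer pages, with some noise.
noise = [50, -40, 30, -60, 20, 0, -30, 60, -10, 40]
word_count = [800 + 120 * a + e for a, e in zip(authority, noise)]

# The toy "ranking algorithm" sorts by authority only; word count is never used.
position = [sorted(authority, reverse=True).index(a) + 1 for a in authority]

print(round(pearson(word_count, position), 2))  # about -0.99
```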

Mueller dispelled this myth yet again in a Google SEO Office Hours in February 2021:

“From our point of view the number of words on a page is not a quality factor, not a ranking factor.”

For more information on how content length can impact SEO, check out Sam Hollingsworth’s article.

9. LSI Keywords Will Help You Rank

What exactly are LSI keywords? LSI stands for “latent semantic indexing.”

It is a technique used in information retrieval that allows concepts within a text to be analyzed and the relationships between them identified.

Words have nuances dependent on their context. The word “right” has a different connotation when paired with “left” than when it is paired with “wrong.”

Humans can quickly gauge concepts in a text. It is harder for machines to do so.

The ability of machines to understand the context and links between entities is fundamental to their understanding of concepts.

LSI is a huge step forward for a machine’s ability to understand text. What it isn’t is synonyms.
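To see what LSI actually does, here is a minimal sketch using NumPy’s `np.linalg.svd` on a made-up four-document corpus. It builds a term-document count matrix, keeps the two strongest singular dimensions (“concepts”), and compares terms in that reduced space. “car” and “automobile” never appear in the same document, so their raw vectors share nothing, yet they end up pointing the same way because both co-occur with “engine.” The relationship is inferred from co-occurrence in this specific corpus, not looked up from a synonym list, and none of this says anything about how Google ranks pages.

```python
import numpy as np

# Hypothetical mini-corpus. Rows of A are terms, columns are documents.
terms = ["car", "automobile", "engine", "banana", "apple", "fruit"]
docs = ["car engine", "automobile engine", "banana fruit", "apple fruit"]
A = np.array([[doc.split().count(t) for doc in docs] for t in terms], dtype=float)

# LSI: a truncated SVD keeps only the k strongest latent "concepts".
k = 2
U, s, Vt = np.linalg.svd(A, full_matrices=False)
term_vectors = U[:, :k] * s[:k]  # one row per term, placed in concept space


def cosine(x: np.ndarray, y: np.ndarray) -> float:
    """Cosine similarity between two vectors."""
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))


car, auto, banana = (terms.index(t) for t in ("car", "automobile", "banana"))

# Raw document space: the two terms never share a document, so similarity is 0.
print(round(cosine(A[car], A[auto]), 3))
# Concept space: shared co-occurrence with "engine" pulls them together.
print(round(cosine(term_vectors[car], term_vectors[auto]), 3))
# Unrelated terms stay (near) orthogonal.
print(round(cosine(term_vectors[car], term_vectors[banana]), 3))
```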

Unfortunately, the SEO community has devolved the field of LSI into the belief that using words that are similar or thematically linked will boost rankings for words that aren’t expressly mentioned in the text.

It’s simply not true. Google has gone far beyond LSI in its understanding of text with the introduction of BERT, as just one example.

For more about what LSI is and how it does or doesn’t affect rankings, take a look at this article.

10. SEO Takes 3 Months

It helps us get out of sticky conversations with our bosses or clients. It leaves a lot of wiggle room if you aren’t getting the results you promised: “SEO takes at least three months to have an effect.”

It is fair to say that there are some changes that will take time for the search engine bots to process.

There is then, of course, some time needed to see if those changes are having a positive or negative effect. Then more time might be needed to refine and tweak your work.

That doesn’t mean that any activity you carry out in the name of SEO is going to have no effect for three months. Day 90 of your work will not be when the ranking changes kick in. There is a lot more to it than that.

If you are in a very low-competition market, targeting niche terms, you might see ranking changes as soon as Google recrawls your page. A competitive term could take much longer to see changes in rank.

A study by Semrush suggested that of the 28,000 domains they analyzed, only 19% started ranking in the top 10 positions within six months and managed to maintain those rankings for the rest of the 13-month study.

This study indicates that newer pages struggle to rank high.

However, there is more to SEO than ranking in the top 10 of Google.

For example, a well-positioned Google Business Profile listing with great reviews can pay dividends for a company. Bing, Yandex, and Baidu might make it easier for your brand to conquer the SERPs.

A small tweak to a page title could see an improvement in click-through rates. That could happen the same day if the search engine were to recrawl the page quickly.

Although it can take a long time to see first-page rankings in Google, it is naïve of us to reduce SEO success to that alone.

Therefore, “SEO takes 3 months” simply isn’t accurate.

11. Bounce Rate Is A Ranking Factor

Bounce rate is the percentage of visits to your website that result in no interactions beyond landing on the page. It is typically measured by a website’s analytics program, such as Google Analytics.

Some SEO professionals have argued that bounce rate is a ranking factor because it is a measure of quality.

Unfortunately, it is not a good measure of quality.

There are many reasons why a visitor might land on a webpage and leave again without interacting further with the site. They may well have read all the information they needed on that page and left the site to call the company and book an appointment.

In that instance, the visitor bouncing has resulted in a lead for the company.

Although a visitor leaving a page having landed on it could be an indicator of poor-quality content, it isn’t always. Therefore, it wouldn’t be reliable enough for a search engine to use as a measure of quality.

“Pogo-sticking,” or a visitor clicking on a search result and then returning to the SERPs, would be a more reliable indicator of the quality of the landing page.

It would suggest that the content of the page was not what the user was after, so much so that they have returned to the search results to find another page or search again.
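As an illustration of how a pogo-stick could be detected in principle, the sketch below scans a hypothetical, time-ordered log of one search session and flags any result the user clicked and then abandoned for the results page within a short window. The event format and the 30-second threshold are assumptions made up for the example; Google has not said that it uses such a signal or how it would define one.

```python
from dataclasses import dataclass


@dataclass
class Event:
    t: float   # seconds since the search was made
    kind: str  # "click" (a result was clicked) or "serp" (user is back on the results page)
    url: str = ""


def pogo_sticks(events: list[Event], max_dwell: float = 30.0) -> list[str]:
    """Return URLs that were clicked and then abandoned for the SERP within max_dwell seconds."""
    abandoned = []
    for current, nxt in zip(events, events[1:]):
        if current.kind == "click" and nxt.kind == "serp" and nxt.t - current.t <= max_dwell:
            abandoned.append(current.url)
    return abandoned


# Hypothetical session: the first result disappointed, the second one satisfied.
session = [
    Event(0.0, "click", "https://a.example/pizza"),
    Event(8.0, "serp"),
    Event(12.0, "click", "https://b.example/pizza"),
]
print(pogo_sticks(session))
```

The last click is never flagged because the user did not come back, which is the point: the signal is the return to the results, not the exit from the page.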

John Mueller cleared this up (again) during Google Webmaster Central Office Hours in June 2020. He was asked whether sending users to a login page would appear to be a “bounce” to Google and damage their rankings:

“So, I think there’s a bit of misconception here, that we’re looking at things like the analytics bounce rate when it comes to ranking websites, and that’s definitely not the case.”

In another Google Webmaster Central Office Hours, in July 2018, he also said:

“We try not to use signals like that when it comes to search. So that’s something where there are lots of reasons why users might go back and forth, or look at different things in the search results, or stay just briefly on a page and move back again. I think that’s really hard to refine and say, ‘well, we could turn this into a ranking factor.’”

So, why does this keep coming up? Well, for a lot of people, it’s because of this one paragraph in Google’s How Search Works:

“Beyond looking at keywords, our systems also analyze if content is relevant to a query in other ways. We also use aggregated and anonymised interaction data to assess whether Search results are relevant to queries.”

The issue with this is that Google doesn’t specify what this “aggregated and anonymised interaction data” is. This has led to a lot of speculation and, of course, arguments.

My opinion? Until we have more conclusive studies, or hear something else from Google, we need to keep testing to determine what this interaction data is.

For now, regarding the traditional definition of a bounce, I’m leaning towards “myth.”

In itself, bounce rate (measured through the likes of Google Analytics) is a very noisy, easily manipulated figure. Could something akin to a bounce be a ranking signal? Absolutely, but it would need to be a reliable, repeatable data point that genuinely measures quality.

In the meantime, if your pages are not satisfying user intent, that is definitely something you need to work on – not simply because of bounce rate.

Fundamentally, your pages should encourage users to interact or, if it isn’t that sort of page, at least have users leave your site with a positive brand association.

12. It’s All About Backlinks

Backlinks are important – that’s without much contention within the SEO community. However, exactly how important is still debated.

Some SEO pros will tell you that backlinks are one of many tactics that will influence rankings, but they are not the most important. Others will tell you they’re the only real game-changer.

What we do know is that the effectiveness of links has changed over time. Back in the wild pre-Jagger days, link-building consisted of adding a link to your website wherever you could.

Forum comments, spun articles, and irrelevant directories were all good sources of links.

It was easy to build effective links. It’s not so easy now.

Google has continued to make changes to its algorithms that reward higher-quality, more relevant links and disregard or penalize “spammy” links.

However, the power of links to affect rankings is still great.

There will be some industries that are so immature in SEO that a site can rank well without investing in link-building, purely through the strength of its content and technical efficiency.

That’s not the case with most industries.

Relevant backlinks will, of course, help with ranking, but they need to go hand-in-hand with other optimizations. Your website still needs to have relevant content, and it must be crawlable.

If you want your traffic to actually do something when they hit your website, it’s definitely not all about backlinks.

Ranking is only one part of getting converting visitors to your site. The content and usability of the site are extremely important in user engagement.

Following the slew of Helpful Content updates and a better understanding of what Google considers E-E-A-T, we know that content quality is extremely important.

Backlinks can definitely help to indicate that a page would be useful to a reader, but there are many other factors that could suggest that, too.

13. Keywords In URLs Are Very Important

Cram your URLs full of keywords. It’ll help.

Unfortunately, it’s not quite as powerful as that.

John Mueller has said several times that keywords in a URL are a very minor, lightweight ranking signal.

In a Google SEO Office Hours in 2021, he affirmed again:

“We use the words in a URL as a very, very lightweight factor. And from what I recall, this is primarily something that we would take into account when we haven’t had access to the content yet.

So, if this is the very first time we see this URL and we don’t know how to classify its content, then we might use the words in the URL as something to help rank us better.

But as soon as we’ve crawled and indexed the content there, then we have a lot more information.”

If you are looking to rewrite your URLs to include more keywords, you are likely to do more harm than good.

Redirecting URLs en masse should be done only when necessary, as there is always a risk when restructuring a site.

For the sake of adding keywords to a URL? Not worth it.

14. Website Migrations Are All About Redirects

SEO professionals hear this too often: if you are migrating a website, all you need to do is remember to redirect any URLs that are changing.

If only this one were true.

Actually, website migration is one of the most fraught and complicated procedures in SEO.

A website changing its layout, content management system (CMS), domain, and/or content can all be considered a website migration.

In each of those examples, there are several aspects that could affect how the search engines perceive the quality and relevance of the pages to their targeted keywords.

As a result, there are numerous checks and configurations that need to happen if the site is to maintain its rankings and organic traffic – ensuring tracking hasn’t been lost, maintaining the same content targeting, and making sure the search engine bots can still access the right pages.

All of this needs to be considered when a website is significantly changing.

Redirecting URLs that are changing is a very important part of website migration. It is by no means the only thing to be concerned about.
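For the redirect part of a migration checklist, a minimal sketch: follow each old URL through recorded server responses and compare where it lands with the agreed redirect map. The paths, responses, and map are hypothetical; in a real audit you would fetch each URL with an HTTP client configured not to follow redirects automatically. The checks flag a missing redirect, a temporary (302/307) rather than permanent (301/308) redirect, chains longer than one hop, loops, and the wrong destination. Everything else on the migration list, such as tracking and content targeting, still needs checking separately.

```python
from typing import Optional

# Hypothetical crawl results: path -> (HTTP status, Location header or None).
responses = {
    "/old-shoes": (301, "/shoes"),
    "/old-boots": (301, "/boots-temp"),
    "/boots-temp": (301, "/boots"),
    "/old-socks": (302, "/socks"),
    "/shoes": (200, None),
    "/boots": (200, None),
    "/socks": (200, None),
}

# Hypothetical redirect map agreed during migration planning: old path -> new path.
redirect_map = {"/old-shoes": "/shoes", "/old-boots": "/boots", "/old-socks": "/socks"}

REDIRECT_CODES = {301, 302, 307, 308}
PERMANENT_CODES = {301, 308}


def check_redirect(old: str, expected: str,
                   responses: dict[str, tuple[int, Optional[str]]],
                   max_hops: int = 5) -> list[str]:
    """Follow redirects for one old URL and list any problems with its mapping."""
    url, hops, seen = old, [], {old}
    status, location = responses.get(url, (404, None))
    while status in REDIRECT_CODES and location:
        hops.append(status)
        if location in seen or len(hops) > max_hops:
            return ["redirect loop or too many hops"]
        seen.add(location)
        url = location
        status, location = responses.get(url, (404, None))

    problems = []
    if not hops:
        problems.append("not redirected")
    if any(code not in PERMANENT_CODES for code in hops):
        problems.append("uses a temporary redirect")
    if len(hops) > 1:
        problems.append(f"redirect chain of {len(hops)} hops")
    if url != expected:
        problems.append(f"ends at {url}, expected {expected}")
    if status != 200:
        problems.append(f"final status {status}")
    return problems


for old, new in redirect_map.items():
    print(old, check_redirect(old, new, responses) or "OK")
```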

15. Well-Known Websites Will Always Outrank Unknown Websites

It stands to reason that a larger brand will have resources that smaller brands do not. As a result, more can be invested in SEO.

More exciting content pieces can be created, leading to a higher volume of backlinks acquired. The brand name alone can lend more credence to outreach attempts.

The real question is, does Google algorithmically or manually boost big brands because of their fame?

This one is a bit contentious.

Some people say that Google favors big brands. Google says otherwise.

In 2009, Google released an algorithm update named “Vince.” This update had a huge impact on how brands were treated in the SERPs.

Brands that were well-known offline saw ranking increases for broad competitive keywords. It stands to reason that brand awareness can help with discovery through Search.

It’s not necessarily time for smaller brands to throw in the towel.

The Vince update falls very much in line with other Google moves towards valuing authority and quality.

Big brands are often more authoritative on broad-level keywords than smaller contenders.

However, small brands can still win.

Long-tail keyword targeting, niche product lines, and local presence can all make smaller brands more relevant to a search result than established brands.

Yes, the odds are stacked in favor of big brands, but it’s not impossible to outrank them.

- Verdict: Neither entirely truth nor myth.

16. Your Page Needs To Include ‘Near Me’ To Rank Well For Local SEO

It’s understandable that this myth is still prevalent.

There is still a lot of focus on keyword search volumes in the SEO industry, sometimes at the expense of considering user intent and how the search engines understand it.

When a searcher is looking for something with local intent, i.e., a place or service relevant to a physical location, the search engines will take this into account when returning results.

With Google, you will likely see the Google Maps results as well as the standard organic listings.

The Maps results are clearly centered around the location searched. However, so are the standard organic listings when the search query denotes local intent.

So, why do “near me” searches confuse some?

A typical keyword research exercise might yield something like the following:

- “pizza restaurant manhattan” – 110 searches per month.

- “pizza restaurants in manhattan” – 110 searches per month.

- “best pizza restaurant manhattan” – 90 searches per month.

- “best pizza restaurants in manhattan” – 90 searches per month.

- “best pizza restaurant in manhattan” – 90 searches per month.

- “pizza restaurants near me” – 90,500 searches per month.

With search volume like that, you’d think [pizza restaurants near me] would be the one to rank for, right?

It is likely, however, that people searching for [pizza restaurant manhattan] are in the Manhattan area or planning to travel there for pizza.

[pizza restaurants near me] has 90,500 searches across the USA. The likelihood is that the vast majority of those searchers are not looking for Manhattan pizzas.

Google knows this and, therefore, will serve pizza restaurant results relevant to the searcher’s location.

Therefore, the “near me” element of the search becomes less about the keyword and more about the intent behind it. Google will simply interpret it as the location the searcher is in.

So, do you need to include “near me” in your content to rank for those [near me] searches?

No, you need to be relevant to the location the searcher is in.

17. Better Content Equals Better Rankings

It’s prevalent in SEO forums and X (formerly Twitter) threads. The common complaint is, “My competitor is ranking above me, but I have amazing content, and theirs is terrible.”

The cry is one of indignation. After all, shouldn’t search engines reward sites for their “amazing” content?

This is both a myth and sometimes a delusion.

The quality of content is a subjective consideration. If it’s your own content, it’s harder still to be objective.

Perhaps in Google’s eyes, your content isn’t better than your competitors’ for the search terms you want to rank for.

Perhaps you don’t meet searcher intent as well as they do. Maybe you have “over-optimized” your content and reduced its quality.

In some instances, better content will equal better rankings. In others, the technical performance of the site or its lack of local relevance may cause it to rank lower.

Content is one factor within the ranking algorithms, not the only one.

18. You Need To Blog Every Day

This is a frustrating myth because it seems to have spread outside of the SEO industry.

The claim goes like this: Google loves frequent content, so you should add new content or tweak existing content every day for “freshness.”

Where did this idea come from?

Google had an algorithm update in 2011 that rewards fresher results in the SERPs.

This is because, for some queries, the fresher the results, the greater the likelihood of accuracy.

For instance, if you searched for [royal baby] in the UK in 2013, you would be served news articles about Prince George. Search it again in 2015, and you would see pages about Princess Charlotte.

In 2018, you would see reports about Prince Louis at the top of the Google SERPs, and in 2019 it would be baby Archie.

If you were to search [royal baby] in 2021, shortly after the birth of Lilibet, then seeing news articles on Prince George would likely be unhelpful.

In this instance, Google discerns the user’s search intent and decides that showing articles related to the newest UK royal baby would be better than showing an article that is arguably more rank-worthy due to authority, etc.

What this algorithm update doesn’t mean is that newer content will always outrank older content. Google decides whether the “query deserves freshness” or not.

If it does, then the age of content becomes a more important ranking factor.

This means that if you are creating content purely to make sure it’s newer than competitors’ content, you are not necessarily going to benefit.

If the query you want to rank for doesn’t deserve freshness, e.g., [who is Prince William’s third child?], a fact that will not change, then the age of content will not play a significant part in rankings.

If you are writing content every day thinking it keeps your website fresh and, therefore, more rank-worthy, then you are likely wasting time.

It would be better to write well-considered, researched, and useful content pieces less frequently and reserve your resources to make those highly authoritative and shareable.

19. You Can Optimize Copy Once & Then It’s Done

The phrase “SEO optimized” copy is a common one in agency-land.

It’s used as a way to explain the process of creating copy that will be relevant to frequently searched queries.

The trouble with this is that it suggests that once you have written that copy – and ensured it adequately answers searchers’ queries – you can move on.

Unfortunately, over time, how searchers look for content might change. The keywords they use and the type of content they want could shift.

The search engines, too, may change what they consider the most relevant answer to the query. Perhaps the intent behind the keyword comes to be perceived differently.

The layout of the SERPs might change, meaning videos are shown at the top of the search results where previously there were only webpage results.

If you look at a page only once and don’t continue to update it and evolve it with user needs, then you risk falling behind.

20. Google Respects The Declared Canonical URL As The Preferred Version For Search Results

This can be very frustrating. You have several pages that are near duplicates of each other. You know which one is your main page, the one you want to rank, the “canonical.” You tell Google that through the rel=canonical tag.

You’ve chosen it. You’ve identified it in the HTML.

Google ignores your wishes, and another of the duplicate pages ranks instead.

The assumption that Google will take your chosen page and treat it as the canonical out of a set of duplicates is an easy one to make.

It makes sense that the website owner would know best which page should rank above its cousins. However, Google will sometimes disagree.

There may be instances where Google chooses another page from the set as a better candidate to show in the search results.

This could be because that page receives more backlinks from external sites than your chosen page. It could be that it’s included in the sitemap or is linked to from your main navigation.

Essentially, the canonical tag is a signal – one of many that will be taken into account when Google chooses which page from a set of duplicates should rank.

If you have conflicting signals on your site, or externally, then your chosen canonical page may be overlooked in favor of another page.
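One conflicting signal you can check on your own site is whether a group of near-duplicate pages all declare the same canonical. The Python sketch below uses only the standard library's `html.parser` to read the `rel=canonical` link from each page's HTML and flag missing, repeated, or inconsistent declarations. The function names and example URLs are hypothetical, and the check only sees on-page tags, not the backlinks, sitemap entries, or navigation links that Google also weighs.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        # rel may hold several space-separated tokens; match case-insensitively.
        rel_tokens = (attributes.get("rel") or "").lower().split()
        href = (attributes.get("href") or "").strip()
        if "canonical" in rel_tokens and href:
            self.canonicals.append(href)


def declared_canonicals(html):
    """Return every canonical URL declared in one page's HTML."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    return parser.canonicals


def find_canonical_conflicts(pages):
    """pages maps each duplicate URL to its HTML; returns a list of problems."""
    problems = []
    targets = set()
    for url, html in pages.items():
        found = declared_canonicals(html)
        if not found:
            problems.append(f"{url}: no rel=canonical declared")
        elif len(set(found)) > 1:
            problems.append(f"{url}: conflicting rel=canonical tags {sorted(set(found))}")
        else:
            targets.add(found[0])
    if len(targets) > 1:
        problems.append("duplicates declare different canonicals: " + ", ".join(sorted(targets)))
    return problems


if __name__ == "__main__":
    # Hypothetical duplicate product pages that disagree about the canonical.
    pages = {
        "https://example.com/shoes?color=red": '<link rel="canonical" href="https://example.com/shoes">',
        "https://example.com/shoes?sort=price": '<link rel="canonical" href="https://example.com/shoes?sort=price">',
    }
    for problem in find_canonical_conflicts(pages):
        print(problem)
```

An empty result only means your own tags agree; Google can still pick a different URL if external signals point elsewhere.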

Want to know if Google has selected another URL as the canonical despite your canonical tag? In Google Search Console, in the Index Coverage report, you might see this: “Duplicate, Google chose different canonical than user.”

Google’s support documents helpfully explain what this means:

“This page is marked as canonical for a set of pages, but Google thinks another URL makes a better canonical. Google has indexed the page that we consider canonical rather than this one.”

21. Google Has 3 Top Ranking Factors

It’s links, content, and RankBrain, right?

The idea that these are the three top ranking factors seems to come from a WebPromo Q&A in 2016 with Andrei Lipattsev, a search quality senior strategist at Google at the time (recovered through the Wayback Machine; find this discussion at around the 30-minute mark).

When questioned on the “other two” top ranking factors (the questioner assumed that RankBrain was one), Lipattsev stated that links pointing to a site and content were the other two. He does clarify by saying:

“Third place is a hotly contested issue. I think… It’s a funny one. Take this with a grain of salt. […] And so I guess, if you do that, then you’ll see elements of RankBrain having been involved in here, rewriting this query, applying it like this over here… And so you’d say, ‘I see this two times as often as the other thing, and two times as often as the other thing’. So it’s somewhere in number three.

It’s not like having three links is ‘X’ important, and having five keywords is ‘Y’ important, and RankBrain is some ‘Z’ factor that is also somehow important, and you multiply all of that … That’s not how this works.”

However it started, the concept prevails. A good backlink profile, great copy, and “RankBrain”-type signals are what matter most for rankings, according to many SEO pros.

What we have to consider when reviewing this idea is John Mueller’s response to a question in a 2017 English Google Webmaster Central office-hours hangout.

Mueller is asked if there is a one-size-fits-all approach to the top three ranking signals in Google. His answer is a clear “No.”

He follows that statement with a discussion around the timeliness of searches and how that might require different search results to be shown.

He also mentions that depending on the context of the search, different results may need to be shown, for instance, brand or shopping results.

He goes on to explain that he doesn’t think there is one set of ranking factors that can be declared the top three and that apply to all search results all the time.

Google’s “How Search Works” documentation clearly states:

“To give you the most useful information, Search algorithms look at many factors and signals, including the words of your query, relevance and usability of pages, expertise of sources, and your location and settings.

The weight applied to each factor varies depending on the nature of your query. For example, the freshness of the content plays a bigger role in answering queries about current news topics than it does about dictionary definitions.”

- Verdict: Not entirely truth or myth.

22. Use The Disavow File To Proactively Maintain A Site’s Link Profile

To disavow or not to disavow: this question has come up a lot over the years since Penguin 4.0.

Some SEO professionals are in favor of adding any link that could be considered spammy to their site’s disavow file. Others are more confident that Google will ignore those links anyway and save themselves the trouble.

It’s definitely more nuanced than that.

In a 2019 Webmaster Central Office Hours Hangout, Mueller was asked about the disavow tool and whether we should have confidence that Google is ignoring medium (but not very) spammy links.

His answer indicated that there are two instances where you might want to use a disavow file:

- In cases where a manual action has been given.

- And where you think that, if someone from the webspam team saw it, they would issue a manual action.

You might not want to add every spammy link to your disavow file. In practice, that could take a long time if you have a very visible site that accrues thousands of these links a month.

There will be some links that are obviously spammy, and their acquisition is not a result of activity on your part.

However, where they are a result of some less-than-awesome link building strategies (buying links, link exchanges, etc.), you may want to proactively disavow them.
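If you do decide to proactively disavow links from past link buying, the disavow file itself is a plain-text list: one URL per line, `domain:` entries for whole domains, and lines starting with `#` as comments. Here is a minimal Python sketch that assembles such a file; the function name and example domains are hypothetical, and it is worth checking Google's disavow documentation before uploading anything.

```python
from urllib.parse import urlparse


def build_disavow_file(domains, urls, note=""):
    """Build disavow-file text: an optional '#' comment, domain: lines, then URLs."""
    domain_set = {d.strip().lower() for d in domains if d.strip()}
    lines = [f"# {note}"] if note else []
    lines += [f"domain:{domain}" for domain in sorted(domain_set)]
    for url in sorted({u.strip() for u in urls if u.strip()}):
        host = urlparse(url).hostname or ""  # .hostname is already lowercased
        # Skip URLs whose exact host is already disavowed with a domain: line.
        if host in domain_set:
            continue
        lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical links left over from a past paid-link campaign.
    print(build_disavow_file(
        domains=["paid-links.example"],
        urls=["https://paid-links.example/sponsored", "https://blog.example/spun-article"],
        note="Links bought in a past campaign",
    ))
```

Keeping a comment line with the reason for each batch makes it easier to revisit the file later, when you may want to remove entries rather than add them.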

Read Roger Montti’s full breakdown of the 2019 exchange with John Mueller to get a better idea of the context around this discussion.

- Verdict: Not a myth, but don’t waste your time unnecessarily.

23. Google Values Backlinks From All High Authority Domains

The better the website authority, the bigger the impact it will have on your site’s ability to rank. You will hear that in many SEO pitches, client meetings, and training sessions.

However, that’s not the whole story.

For one, it’s debatable whether Google has a concept of domain authority (see “Google Cares About Domain Authority” above).

More importantly, a lot goes into Google’s calculation of whether a link will affect a site’s ability to rank highly.

Relevancy, contextual clues, and nofollow link attributes: none of these should be ignored when chasing a link from a high “domain authority” website.

John Mueller also threw a cat among the pigeons during a live Search Off the Record podcast recorded at BrightonSEO in 2022 when he said:

“And to some extent, links will always be something that we care about because we have to find pages somehow. It’s like how do you find a page on the web without some reference to it? But my guess is over time, it won’t be such a big factor as sometimes it is today. I think already, that’s something that’s been changing quite a bit.”

24. You Can’t Rank A Page Without Lightning-Fast Loading Speed

There are many reasons to make your pages fast: usability, crawlability, and conversion. Arguably, speed is important for the health and performance of your website, and that should be enough to make it a priority.

However, is it something that is absolutely key to ranking your website?

As this Google Search Central post from 2010 suggests, it was definitely something that factored into the ranking algorithms. Back when it was published, Google stated:

“While site speed is a new signal, it doesn’t carry as much weight as the relevance of a page. Currently, fewer than 1% of search queries are affected by the site speed signal in our implementation and the signal for site speed only applies for visitors searching in English on Google.com at this point.”

Is it still only affecting such a low percentage of visitors?

In 2021, the Google Page Experience system, which incorporates the Core Web Vitals (for which speed is important), rolled out on mobile. It was followed in 2022 by a rollout of the system to desktop.

This was met with a flurry of activity from SEO pros trying to get ready for the update.

Many perceived it to be something that would make or break their site’s ranking potential. However, over time, Google representatives have downplayed the ranking effect of Core Web Vitals.

More recently, in May 2023, Google announced that Interaction to Next Paint (INP) would replace First Input Delay (FID) as a Core Web Vital, a change that took effect in March 2024.

Google says that INP addresses some of the limitations of FID. This change in how a page’s responsiveness is measured shows that Google still cares about accurately measuring user experience.
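To make these metrics concrete: Google publishes "good" and "poor" thresholds for each Core Web Vital and assesses them at the 75th percentile of real page loads. The Python sketch below rates a list of field measurements against those thresholds. The nearest-rank percentile is a simplifying assumption (field data tools aggregate differently), the function names are hypothetical, and the rating describes user experience, not how much weight any ranking system gives it.

```python
import math

# Google's published Core Web Vitals boundaries: (good_max, needs_improvement_max).
# Values at or below good_max are "good"; values above needs_improvement_max are "poor".
THRESHOLDS = {
    "LCP": (2500, 4000),  # Largest Contentful Paint, in milliseconds
    "INP": (200, 500),    # Interaction to Next Paint, in milliseconds
    "CLS": (0.1, 0.25),   # Cumulative Layout Shift, unitless
}


def percentile_75(samples):
    """Nearest-rank 75th percentile (a simplification of how field tools aggregate)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def classify(metric, samples):
    """Rate a metric as good / needs improvement / poor from its 75th percentile."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    value = percentile_75(samples)
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Hypothetical INP measurements (ms) from real visits to one page.
    print(classify("INP", [90, 140, 180, 260, 310, 620]))
```

Using the 75th percentile rather than the average means a page is rated by its slower experiences, which is why a handful of very slow interactions can move the result.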

From Google’s previous statements and recent focus on Core Web Vitals, we can see that load speed continues to be an important ranking factor.

However, it will not necessarily cause your website to dramatically increase or decrease in rankings.

Google representatives Gary Illyes, Martin Splitt, and John Mueller hypothesized about the weighting of speed as a ranking factor during a 2021 “Search Off the Record” podcast.

Their discussion drew out the thinking around page load speed as a ranking metric and how it would need to be considered a fairly lightweight signal.

They went on to talk about it being more of a tie-breaker, as you can make an empty page lightning-fast, but it will not be of much use to a searcher.

John Mueller reinforced this in 2022 during Google SEO Office Hours when he said:

“Core Web Vitals is definitely a ranking factor. We have that for mobile and desktop now. It’s based on what users actually see and not kind of a theoretical test of your pages […] What you don’t tend to see is big ranking changes overall for that.

But rather, you would see changes for queries where we have similar content in the search results. So if someone is searching for your company name, we would not show some random blog, just because it’s a little bit faster, instead of your homepage.

We would show your homepage, even if it’s very slow. But, if someone is searching for, I don’t know, trainers, and there are lots of people writing about trainers, then that’s where the speed aspect does play a bit more of a role.”

With this in mind, can we consider page speed a major ranking factor?

My opinion is no. Page speed is definitely one of the ways Google decides which pages should rank above others, but it is not a major one.

25. Crawl Budget Isn’t An Issue

Crawl budget – the idea that every time Googlebot visits your website, there is a limited number of resources it will visit – isn’t a contentious issue. However, how much attention should be paid to it is.

For instance, many SEO professionals consider crawl budget optimization a central part of any technical SEO roadmap. Others will only consider it if a site reaches a certain size or complexity.

Google is a company with finite resources. It cannot possibly crawl every single page of every site every time its bots visit. Therefore, some of the sites that get visited might not see all of their pages crawled every time.

Google has helpfully created a guide for owners of large and frequently updated websites to help them understand how to enable their sites to be crawled.

In the guide, Google states:

“If your site does not have a large number of pages that change rapidly, or if your pages seem to be crawled the same day that they are published, you don’t need to read this guide; merely keeping your sitemap up to date and checking your index coverage regularly is adequate.”

Therefore, it would seem that Google is in favor of some sites paying attention to its advice on managing crawl budget, but doesn’t consider it necessary for all.

For some sites, particularly ones that have a complex technical setup and many hundreds of thousands of pages, managing crawl budget is important. For those with a handful of easily crawled pages, it isn’t.
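For the smaller sites Google describes, the advice boils down to keeping the sitemap up to date. A minimal Python sketch that regenerates a sitemap in the sitemaps.org format from a list of URLs and last-modified dates might look like this. `build_sitemap` and the example URLs are hypothetical, and in practice the dates should reflect when each page's content actually changed rather than when the file was generated.

```python
from datetime import date
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (absolute_url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for url, modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # ElementTree escapes & and < for us
        ET.SubElement(entry, "lastmod").text = modified.isoformat()  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical pages and the dates their content last changed.
    print(build_sitemap([
        ("https://example.com/", date(2024, 3, 12)),
        ("https://example.com/menu?size=large&crust=thin", date(2024, 2, 1)),
    ]))
```

Generating the file from the same data that builds the pages, rather than editing it by hand, is what keeps it "up to date" without extra effort.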

26. There Is A Right Way To Do SEO

This is probably a myth in many industries, but it seems prevalent in SEO. There is a lot of gatekeeping in SEO social media, forums, and chats.

Unfortunately, it’s not that simple.

We know some core tenets about SEO.

Sometimes, a search engine representative states something that is then dissected, tested, and ultimately declared true.

The rest is a result of personal and collective trial and error, testing, and experience.

Processes are extremely valuable within SEO business functions, but they have to evolve and be applied appropriately.

Different websites within different industries will respond to changes in ways others wouldn’t. Changing a meta title so it’s under 60 characters long might help the click-through rate for one page and not for another.
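If you want to trial the under-60-characters idea on a few pages before applying it everywhere, a small helper can produce candidate titles. This is a Python sketch with hypothetical function names; the 60-character limit is the example above, and character count is only a rough proxy, since Google does not publish a fixed title length.

```python
def shorten_title(title, limit=60):
    """Trim a title to at most `limit` characters without cutting a word in half."""
    title = " ".join(title.split())  # collapse runs of whitespace
    if len(title) <= limit:
        return title
    head = title[: limit + 1]
    # Cut at the last space inside the window; fall back to a hard cut if there is none.
    cut = head.rsplit(" ", 1)[0] if " " in head else title[:limit]
    # Drop trailing separators left dangling by the cut.
    return cut.rstrip(" -|:,")


def pages_to_trial(titles, limit=60):
    """Map each URL whose (whitespace-normalized) title exceeds `limit` to a shortened candidate."""
    return {
        url: shorten_title(title, limit)
        for url, title in titles.items()
        if len(" ".join(title.split())) > limit
    }


if __name__ == "__main__":
    # Hypothetical page titles.
    titles = {
        "/pizza-guide": "Best Pizza Restaurants In Manhattan: Our Guide To Slices, Pies And Late Night Spots",
        "/contact": "Contact Us",
    }
    print(pages_to_trial(titles))
```

The candidates are a starting point for a human edit, not titles to ship automatically.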

Ultimately, we have to hold any SEO advice we’re given lightly before deciding whether it’s right for the website we are working on.

When Can Something Appear To Be A Myth?

Sometimes an SEO technique can be written off as a myth by others purely because they have not experienced success from carrying out this activity for their own site.

It is important to remember that every website has its own industry, set of competitors, underlying technology, and other factors that make it unique.

Applying techniques across every website and expecting them to have the same outcome is naive.

Someone may not have had success with a technique when they tried it in their highly competitive vertical.

That doesn’t mean it won’t help someone in a less competitive industry succeed.

Causation & Correlation Being Confused

Sometimes, SEO myths arise because of an inappropriate connection between an activity that was carried out and a rise in organic search performance.

If an SEO has seen a benefit from something they did, then it is natural that they would advise others to try the same.

Unfortunately, we’re not always great at separating causation and correlation.

Just because rankings or click-through rates increased around the same time as you implemented a new tactic doesn’t mean it caused the increase. There could be other factors at play.

Soon, an SEO myth arises from an overeager SEO who wants to share what they incorrectly believe to be a golden ticket.

Steering Clear Of SEO Myths

Learning to spot SEO myths and act accordingly can save you from headaches, lost revenue, and a whole lot of wasted time.

Test

The key to not falling for SEO myths is making sure you test advice whenever possible.

If you have been given the advice that structuring your page titles a certain way will help your pages rank better for their chosen keywords, then try it with one or two pages first.

This can help you measure whether making a change across many pages will be worth the time before you commit to it.
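One way to run that measurement, and to guard against the causation-versus-correlation trap described above, is to compare the pages you changed with similar pages you left alone over the same period. The Python sketch below computes a simple difference-in-differences on click-through rate from clicks and impressions, such as those you can export from Search Console. `PageStats` and the other names are hypothetical, and the result is only a point estimate: with a handful of pages, a difference of this size can easily be noise, so treat it as a prompt for a larger test rather than proof.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """Clicks and impressions for one page over one period."""
    clicks: int
    impressions: int


def pooled_ctr(pages):
    """Total clicks divided by total impressions across a group of pages."""
    clicks = sum(p.clicks for p in pages)
    impressions = sum(p.impressions for p in pages)
    return clicks / impressions if impressions else 0.0


def ctr_lift(test_before, test_after, control_before, control_after):
    """Difference-in-differences of pooled CTR, in percentage points.

    Subtracting the control group's change removes movement that affected
    every page (seasonality, an algorithm update) from the test group's change.
    """
    test_change = pooled_ctr(test_after) - pooled_ctr(test_before)
    control_change = pooled_ctr(control_after) - pooled_ctr(control_before)
    return 100 * (test_change - control_change)


if __name__ == "__main__":
    # Hypothetical numbers: two retitled pages vs. two comparable untouched pages.
    lift = ctr_lift(
        test_before=[PageStats(40, 2000), PageStats(25, 1500)],
        test_after=[PageStats(70, 2100), PageStats(35, 1400)],
        control_before=[PageStats(30, 1800), PageStats(20, 1200)],
        control_after=[PageStats(36, 1900), PageStats(22, 1100)],
    )
    print(f"Estimated CTR lift: {lift:.2f} percentage points")
```

Choosing control pages with similar traffic and intent to the test pages matters more than the arithmetic; a mismatched control makes the subtraction meaningless.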

Is Google Just Testing?

Sometimes, there will be a big uproar in the SEO community because of changes in the way Google displays or orders search results.

These changes are often tested in the wild before they are rolled out to more search results.

Once a big change has been spotted by one or two SEO pros, advice on how to optimize for it begins to spread.

Remember the favicons in the desktop search results? The upset that caused in the SEO industry (and among Google users in general) was huge.

Suddenly, articles sprang up about the importance of favicons in attracting users to your search results. There was barely time to study whether favicons would affect the click-through rate that much.

Because just like that, Google changed it back.

Before you jump on the latest SEO advice being spread around Twitter as a result of a change by Google, wait to see if the change will hold.

It could be that the advice that appears sound now will quickly become a myth if Google rolls back changes.


Featured Picture: Search Engine Journal/Paulo Bobita
